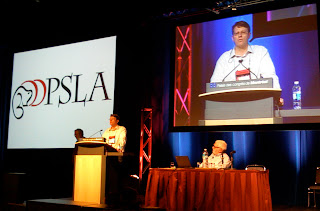

ooPSLA 2007 in Montreal, Canada - Oct. 21 to Oct. 25, 2007

(This is part 4a of my braindump on ooPSLA 2007. I decided to split part 4 into two parts since I generally like reading blog posts that fit on one screen of my laptop, and I imagine a majority of blog readers feel the same. Please see part 1, part 2, and part 3.)

In addition to various paper sessions and one big poster session, ooPSLA, like most week-long conferences, includes a wide variety of workshops, co-located symposiums, and tutorials. Unlike at many other conferences, these secondary sessions are attended and taught by the leaders in the field, and often by the progenitors of the technologies and ideas.

These other sessions attract industry participants and thus create a good mix of attendees. For instance, this year, while waiting in line for lunch, I met developers from one of the big US banks who swear by ooPSLA. One of them has attended for the past five years and has managed to 'infect' his colleagues; they were a group of three this year.

Workshops, symposium, and tutorial

DSM/DSL workshop

Since my recent research centers on using the Ruby on Rails platform to create a domain-specific language (DSL) for Web API mashups, I had to attend the domain-specific modeling/language workshop at ooPSLA. I did not have a chance to submit a workshop paper, so I attended as a regular participant. The workshop spanned three days, which I think is a bit much; I only attended the first day. The AM session papers were a mix of incomplete DSLs and approaches to creating applications using DSLs and DSM. After a nice lunch near old Montreal, where I got to eat with some of the organizers, we reconvened for the PM sessions, which were deeper and also seemed more mature, with a variety of demos and more in-depth discussions.

Overall, I thought the majority of the papers dealt with visual DSLs, that is, environments that encourage or facilitate building applications with visual representations of the DSL. While I realize that there is a growing class of users (non-programmer types) who need to create applications and whom one can target with such visual DSLs, I am also convinced (based on experience) that visual languages are better suited to very narrow problems and do not scale well. My experience using VisualAge C++ (circa 1996) and then VisualAge Java (circa 1997) has left a bitter taste in my mouth when it comes to visual programming environments. I was able to build a complex application that we shipped to our customers; however, because the application was non-trivial, only a handful of developers could modify and debug it. My conclusion at the time was that visual code can be harder to modify and debug than textual code. I did not see anything in the workshop presentations that convinced me that a significant breakthrough had been achieved. I would agree that for some subset of problems and users a visual environment is very productive and attractive; however, I think it's best to layer the visual tools on top of a textual DSL or a platform supporting a textual language, e.g., Ruby, Rails, Python, Java, or C#.

LINQ tutorial

The one tutorial I attended this year was by Erik Meijer of Microsoft on LINQ. First off, I only attended part of the tutorial: it was scheduled as an all-day affair on the first day of the conference, and I was at the DSM/DSL session in the AM. I had met Erik the night before at the hotel bar, and we had various interesting conversations, along with other folks (e.g., Martin Fowler), on many topics. I knew Erik was giving the tutorial and told him I would come for at least part of it. The one thing I must mention right from the start is that Erik is a truly cool dude. I can rarely say this of the Microsoft colleagues I have met, but Erik is just cool. He's a real asset to MS, not just for his many contributions, but also for an overall attitude that debunks the MS engineer stereotype, i.e., the typically arrogant "I know most everything and the world runs on the MS platform" type.

(Photo credit: self with iPhone of Erik Meijer of Microsoft at the Hyatt Montreal hotel lobby)

Microsoft's Language INtegrated Query (LINQ) has been in research and development for a while now. With Visual Studio 2008 and the C# 3.0 language, LINQ is now a prominent feature. In a nutshell, LINQ adds new syntax, semantics, and libraries to the C# language (and really the .NET framework) to support direct query and manipulation of relational data. In other words, using LINQ in C# and other .NET-enabled languages, you can run simple to very complex queries over relational data or other data structures directly in your code. For instance, here is some LINQ C# code that does a couple of simple queries:

    // Assume customers is a C# (or .NET) collection of Customer objects;
    // it can be populated in memory or loaded from a relational DB.
    // A query expression yields an IEnumerable<T>, not an array.
    IEnumerable<Customer> female_customers =
        from c in customers
        where c.sex.ToLower() == "female"
           || c.sex.ToLower() == "f"
        orderby c.age
        select c;

    // Another example
    string[] cities = {"San Jose, CA", "New York, NY",
                       "San Francisco, CA", "Miami, FL",
                       "Raleigh, NC", "Portland, OR",
                       "Seattle, WA"};

    IEnumerable<string> cities_in_california =
        from city in cities
        where city.EndsWith("CA")
        select city;

(You should easily be able to infer the SQL equivalent, though the EndsWith() and ToLower() calls in the selection may be slightly tricky...)

Software Engineering Radio has an episode, episode 72: Erik Meijer on LINQ, where he explains the basics. MSDN hosts a dated but still interesting video of Anders Hejlsberg discussing LINQ and C#.

However, note that unlike with various DB drivers and language wrappers (e.g., JDBC) or even ORMs (e.g., Rails' ActiveRecord), you never deal with SQL, and all results and intermediary variables are C# objects and classes. MS made various additions to C# and the .NET framework to make that happen. From my limited understanding of LINQ (based solely on taking half of Erik's class), it appears that, under the covers, LINQ queries are translated into calls to the .NET LINQ libraries, which are heavily generic C# classes representing the various kinds of queries. I am guessing that the same magic occurs for regular data structures when you use them in LINQ queries. I am also guessing that the LINQ .NET engine does the appropriate "magic" and generates optimized SQL for the database. Naturally, these optimizations will depend on which database you have and not simply on the DB driver you installed. Therefore, I would venture to guess that on MS SQL this will all work fine and dandy, but your mileage will likely vary if you use other DBs, e.g., MySQL, DB2, or Oracle. Since I am not 100% sure of that last statement, please don't quote me on it.
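To make the "translated into calls to the LINQ libraries" part a bit more concrete, here is a minimal sketch of one query written both ways over a plain in-memory array, so no database is involved. The class and method names (QueryTranslationSketch, WithQuerySyntax, WithMethodCalls) are made up for illustration; Where, OrderBy, ToArray, and SequenceEqual are the standard System.Linq extension methods. Take it as my reading of how the two forms line up, not as a description of what the compiler emits in every case.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class QueryTranslationSketch
{
    // Sample data borrowed from the cities example earlier in the post.
    public static readonly string[] Cities =
    {
        "San Jose, CA", "New York, NY", "San Francisco, CA",
        "Miami, FL", "Raleigh, NC", "Portland, OR", "Seattle, WA"
    };

    // The query-expression form, as you would write it with LINQ syntax.
    public static string[] WithQuerySyntax()
    {
        IEnumerable<string> query =
            from city in Cities
            where city.EndsWith("CA")
            orderby city
            select city;
        return query.ToArray();
    }

    // Roughly the same query written as explicit extension-method calls
    // that take lambdas. The trailing "select city" adds nothing here,
    // so no Select call is needed.
    public static string[] WithMethodCalls()
    {
        IEnumerable<string> query = Cities
            .Where(city => city.EndsWith("CA"))
            .OrderBy(city => city);
        return query.ToArray();
    }

    public static void Main()
    {
        Console.WriteLine(string.Join("; ", WithQuerySyntax()));
        // Both forms should yield the same sequence.
        Console.WriteLine(WithQuerySyntax().SequenceEqual(WithMethodCalls()));
    }
}
```

For in-memory collections the lambdas are ordinary delegates that run in your process. For database-backed sources, the same method names are resolved against a different interface (IQueryable), where the lambdas are captured as expression trees that a provider can turn into SQL; that is where the database-specific behavior I worry about would come in.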

Generally, I am a firm believer in having a uniform representation of all parts of a software system, that is, in reducing the impedance mismatch that occurs as one integrates different parts of a system. For instance, in typical Web application development, the application logic is in some language and framework (e.g., Java or C#), but the user interface is coded in HTML, CSS, and JavaScript. The same goes for data stored in a relational database. The various frameworks have facilities to reduce the impedance mismatch. For databases, however, even the best frameworks (e.g., Ruby on Rails' ActiveRecord) show their weaknesses when you need to do any moderately complex query (e.g., one that involves a couple of joins). What typically occurs is that the database binding layer simply offers a pass-through that allows SQL to be submitted directly. The promise and beauty of LINQ is that the impedance mismatch is almost completely removed, since you only deal with the language in question, say C#, and can represent all data elements, and queries over them, directly in the language.

This is all good stuff. However, enter a dose of skepticism. I already identified the first problem: I wonder how much using MS .NET and LINQ ties you to MS SQL. For any decently complex DB application you'll start needing the query optimizer, and if the optimizer has not been made to work with the LINQ engine, then forget anything but a complete MS solution.

The second issue is one I discussed with Erik, and he has not convinced me otherwise. It is simply that there is potential for abuse by developers using LINQ. What I mean is that the relational database community has spent a number of years exploring and addressing the limits of the relational model, such that it is now well understood and can scale really well. These advances have in great part enabled the Web commerce boom we have seen over the past 10 to 15 years. Show me one decently large commerce or Web application and I will point you to an example deployment of a relational database. By bypassing SQL with a language that is not a proper subset of it, or not equivalently researched, you may lose the aforementioned benefits.

Finally, as far as I know, LINQ is not a standard and has not been submitted to a standards body (though I know that there is an effort to create a Java version named jLINQ), so I worry about how much longer it will remain a Microsoft-only technology. I realize that MS invented it, but that does not mean it should not be a proposed standard, which could allow it to be added to various languages and, importantly, allow the various DB vendors to fully support it in various settings... I am likely dreaming a bit here; however, imagine if IBM and others had not created the SQL standards. What would have become of the database market? What about all the nice advances mentioned earlier?

Dynamic Language Symposium

I attended part of the DLS this year, as I had at ooPSLA 2005. It's an interesting bunch, since many of the originators of early OO languages are in attendance, e.g., Dave Ungar (visiting researcher at IBM Research), Mark Miller (Google), Jim Hugunin (Microsoft), and many others.

The talks I attended varied from very practical to esoteric. For instance, Jim Hugunin gave a very interesting and practical talk on the new .NET dynamic language runtime (DLR), which allows .NET to host various dynamic languages relatively easily. Jim was the one who gave us JPython (now Jython), so it was reasonable for him to implement Python on the DLR and share some of the results. The cool thing is that Python works and performs well on .NET: without claiming a thorough study, he mentioned it performed about twice as well as Jython on one Java VM. He also mentioned that there is an effort to move JavaScript and Ruby to the DLR. Interestingly, as in any port, subtle issues come up, especially how to integrate the .NET library and support it in the language. I am not sure whether MS plans to share some of the DLR spec or ideas with the rest of the world; however, this may be a glimpse into the future: a portable, solid, well-performing virtual machine that can efficiently host various dynamic languages. (The JRuby folks are thinking along those lines as well; see the Headius blog on the topic.) Kudos to Microsoft for advancing this agenda, though at the same time it worries me, since I doubt this will ever fully work on Linux or the Mac. One esoteric talk was on RPython, which essentially allows Python code to be typed... weird, though I can see the static-typing heads inflating and smiling.

(Photos credit: self with iPhone of Dave Ungar (creator of Self) of IBM Research and Mark Miller of Google asking questions during keynotes)

Parting thoughts
